Last night I stumbled upon SampleData.io, a website that offers sample datasets you can use for testing or demo purposes. That is quite unique, since proper sample data is hard to find.

The datasets are populated with local first and last names. All records contain real addresses along with fictional e-mail addresses, telephone numbers (with the local prefix) and IP addresses. Latitude and longitude coordinates (geolocations) matching the real addresses are included as well, as are credit card numbers, expiry dates, CVVs and issuers. The credit card numbers are valid according to the Luhn algorithm.
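If you want to confirm that a card number from the dataset really passes the Luhn check, a minimal sketch in Python could look like the one below. The function name `luhn_is_valid` is hypothetical; it is not part of any SampleData.io tooling. Spaces and hyphens are stripped first, and any other non-digit character makes the number invalid.

```python
def luhn_is_valid(number: str) -> bool:
    """Return True if the card number passes the Luhn checksum (hypothetical helper)."""
    # Allow common separators, reject anything else that is not an ASCII digit.
    cleaned = number.replace(" ", "").replace("-", "")
    if len(cleaned) < 2 or not (cleaned.isascii() and cleaned.isdigit()):
        return False

    total = 0
    # Walk from the rightmost digit (the check digit) and double every second digit.
    for i, ch in enumerate(reversed(cleaned)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9  # same as summing the two digits of the doubled value
        total += d
    return total % 10 == 0


print(luhn_is_valid("4111 1111 1111 1111"))  # True
print(luhn_is_valid("4111 1111 1111 1112"))  # False
```

Note that passing the Luhn check only means the number is well-formed; it says nothing about whether a real account exists behind it, which is exactly what you want for test data.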

Here are a few examples, starting with the global sample dataset:

Here is an overview of all unique addresses in Europe:

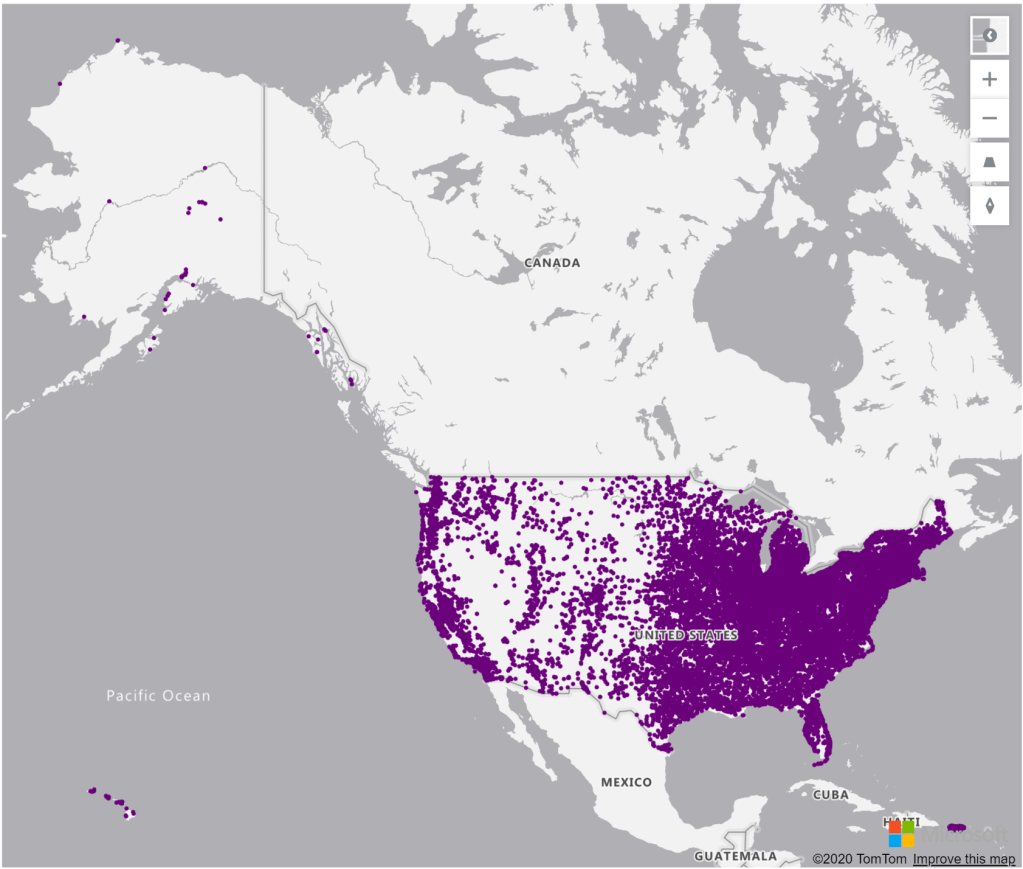

And last but not least, all unique addresses in the United States of America:

With 80 downloadable datasets, almost all Western countries are available. They range from 2,500 up to 250,000 records with unique (real) addresses and their geolocations (latitude and longitude coordinates). Delivery is instant via e-mail and includes a receipt for business expenses.

Oh, and don’t forget to click ‘Preview’ on the Download page to download a small sample file in CSV format.
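Before wiring the preview file into a report, a quick sanity check of the coordinates can save some debugging. The sketch below uses only Python's standard `csv` module. The column names `latitude` and `longitude` and the comma delimiter are assumptions, since the exact layout of the preview file is not described here; adjust them to the file's actual header. The function name `check_coordinates` is hypothetical.

```python
import csv
import io
from typing import List


def check_coordinates(csv_text: str,
                      lat_col: str = "latitude",   # assumed column name
                      lon_col: str = "longitude",  # assumed column name
                      delimiter: str = ",") -> List[int]:
    """Return the 1-based data row numbers whose coordinates are missing or out of range."""
    bad_rows = []
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=delimiter)
    for row_no, row in enumerate(reader, start=1):
        try:
            lat = float(row[lat_col])
            lon = float(row[lon_col])
        except (KeyError, TypeError, ValueError):
            # Missing column, short row (value is None) or non-numeric text.
            bad_rows.append(row_no)
            continue
        # Valid WGS84 ranges; NaN fails both comparisons and is flagged too.
        if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
            bad_rows.append(row_no)
    return bad_rows


sample = "first_name,latitude,longitude\nAnna,52.37,4.89\nBob,95.0,4.89\n"
print(check_coordinates(sample))  # [2]
```

To check a downloaded file, read it with `open(path, encoding="utf-8").read()` and pass the text in.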

So get your Power BI reports ready for some testing! With these geolocations (latitude and longitude coordinates), you’re able to build nice geographical (demo) reports.

Don’t forget the applications for Data Science, since these datasets can be used to test your Machine Learning models. Think of Dynamic Pricing, Product Recommendations and Fraud Detection.